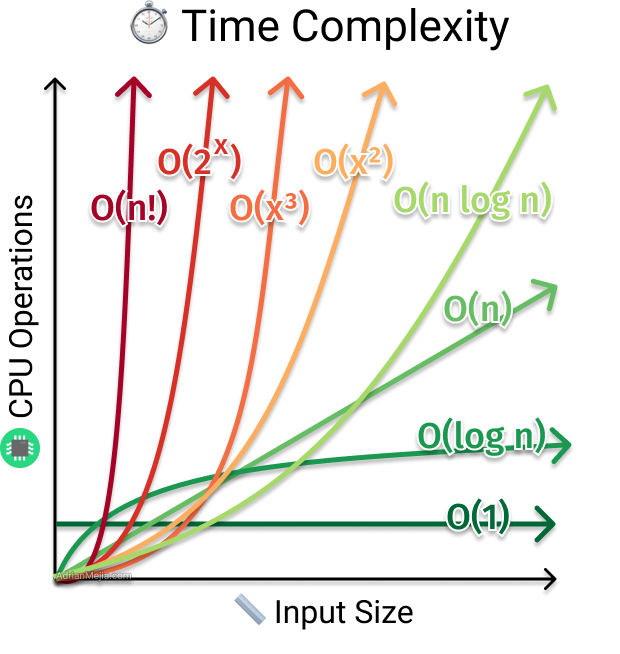

Time Complexity Big O

Most of us have heard the term Big O. We all know its related to time complexity. Time complexity is also one of the most frequently used word in software programming especially when designing algorithms.

What is time complexity and what is this Big O exactly?

Firstly Big O stands for Big Order function and is a framework to analyze and compare algorithms.

We know that CPU is the one who executes the statements. The Big O gives us the ability to compare the execution time based on change in input parameter size which will enable us to analyze and compare algorithms.

Understanding Big O with an example

Let us understand it with an example.

for greeting in ["hello", "hello2", "hello3"]:

print(greeting) # assuming it takes 2 seconds to print.In the above example let us assume it takes 2 seconds to print the statement. So for the given list the total time taken to finish greeting is 2*size of the list i.e 6 seconds. If we increase the input size i.e list size to 100 then the total time taken would be 200 seconds.

From the above example we can come to a conclusion that the execution time depends directly on the size of the input and if we were to represent the time taken and input size in an equation it would be something this

T = K*n where n is input size and K is a constant like 2 in our example

Usually a programme has many loops and statements and the same simple function with additional nested loops and statements will look like below.

T = K1*n2 + K2*n + K3

In such scenarios we take the higher order of the function and this is what we call Big Order function.

So the Big O convention of the same equation T = K1*n2 + K2*n + K3 would be O(n2)

Big O considerations

- As for the constants we ommit the constants since they don’t effect the trend and has no effect on trend. In short constants doesn’t matter.

- Big O cares about the worst-case scenario only hence we pick the highest order of the function. In short lower orders doesn’t matter.